Edge Computing and Its Impact on Network Topology

The Evolution Toward Edge Computing

For decades, network topologies have been designed around centralized computing paradigms: first on-premises data centers, then cloud computing. This approach concentrates processing power, storage, and intelligence in core locations and treats edge locations mainly as data collection points or service delivery endpoints.

However, the explosive growth of Internet of Things (IoT) devices, real-time applications, and bandwidth-intensive services has begun to strain this model. Latency-sensitive applications like autonomous vehicles, industrial automation, augmented reality, and smart city infrastructure demand processing capabilities closer to data sources and end-users. This fundamental shift in requirements has catalyzed the evolution toward edge computing architectures.

Edge computing redistributes computing resources throughout the network, placing processing, storage, and intelligence closer to where data is generated and consumed. This approach doesn't replace centralized computing but complements it by creating a more distributed, multi-tier architecture that optimizes for different workload characteristics.

Redefining Network Topology for Edge Computing

The adoption of edge computing necessitates significant changes to traditional network topologies, creating new design patterns that balance distributed processing with centralized control:

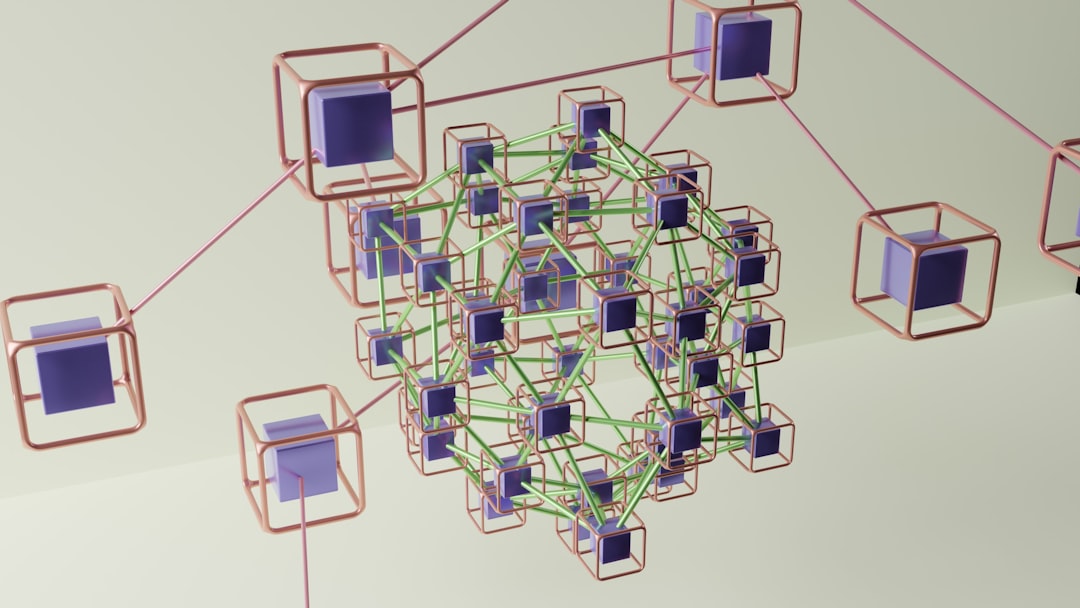

1. From Hierarchical to Mesh-Oriented Structures

Traditional network topologies often follow a strict hierarchy with clear delineation between access, distribution, and core layers. Edge computing blurs these boundaries, introducing:

- Distributed Processing Nodes: Compute resources positioned at strategic locations throughout the network

- Peer-to-Peer Communication: Direct interaction between edge nodes without requiring core mediation

- Multi-Path Connectivity: Redundant connections between edge nodes to improve resilience and throughput

These changes create more complex mesh-like structures that require sophisticated routing and orchestration mechanisms.
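To make the routing point concrete, here is a minimal sketch of latency-aware path selection over a small edge mesh using Dijkstra's shortest-path algorithm. The node names and link latencies are hypothetical. Production routing protocols add much more, such as link-state flooding and equal-cost multi-path.

```python
import heapq

def lowest_latency_path(links, source, target):
    """Return (total_ms, path) for the lowest-latency route, or (inf, []) if unreachable.

    `links` maps each node to {neighbor: one-way link latency in ms}.
    """
    dist = {source: 0.0}
    prev = {}
    heap = [(0.0, source)]
    visited = set()
    while heap:
        d, node = heapq.heappop(heap)
        if node in visited:
            continue
        visited.add(node)
        if node == target:
            # Walk predecessor links back to the source to rebuild the path.
            path = [node]
            while path[-1] != source:
                path.append(prev[path[-1]])
            return d, path[::-1]
        for neighbor, latency in links.get(node, {}).items():
            nd = d + latency
            if nd < dist.get(neighbor, float("inf")):
                dist[neighbor] = nd
                prev[neighbor] = node
                heapq.heappush(heap, (nd, neighbor))
    return float("inf"), []

# Hypothetical mesh: edge-a and edge-b can talk directly or via the core.
mesh = {
    "edge-a": {"edge-b": 3.0, "core": 20.0},
    "edge-b": {"edge-a": 3.0, "core": 18.0},
    "core": {"edge-a": 20.0, "edge-b": 18.0, "edge-c": 22.0},
    "edge-c": {"core": 22.0},
}

print(lowest_latency_path(mesh, "edge-a", "edge-b"))  # (3.0, ['edge-a', 'edge-b'])
```

The direct peer link wins for edge-a to edge-b, while edge-c is still reachable through the core, which is the mix of peer-to-peer and core-mediated paths the list describes.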

2. Multi-Tier Architectures

Edge computing introduces a spectrum of processing locations between end devices and central cloud resources:

- Device Edge: Processing directly on end devices (IoT sensors, smartphones, vehicles)

- Local Edge: Processing within the same facility (factories, buildings, campuses)

- Near Edge: Processing at network aggregation points (cell towers, neighborhood nodes)

- Regional Edge: Processing at metropolitan or regional facilities

- Core/Cloud: Centralized processing for non-latency-sensitive workloads

Each tier has different characteristics in terms of latency, bandwidth, processing capacity, and power constraints, creating a heterogeneous computing continuum.

Case Study: Smart City Traffic Management

A major metropolitan area implemented a multi-tier edge architecture for traffic management. Cameras and sensors perform basic image recognition on the devices themselves (device edge), while intersection-level controllers coordinate traffic light timing (local edge). District-level nodes optimize traffic flow across multiple intersections (near edge), and city-wide analysis for long-term planning runs in regional data centers (regional edge). This approach reduced latency for emergency vehicle prioritization from 2.7 seconds to 380 milliseconds, a reduction of roughly 86%, while cutting bandwidth requirements to the core by 76%.

3. Location-Aware Resource Allocation

Edge computing introduces the concept of location-awareness into network topology:

- Geo-Distributed Resources: Strategically positioned compute and storage based on user density and application needs

- Workload Proximity Optimization: Placing each processing step where it runs most efficiently, given data gravity (the cost of moving large datasets) and latency requirements

- Context-Aware Networking: Adapting routing and quality of service based on physical location and network conditions
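A simple form of location-aware allocation is steering each user to the geographically closest edge site. The sketch below uses the haversine great-circle distance; the site names and coordinates are hypothetical, and real deployments often steer on measured latency rather than raw distance.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))

def nearest_site(user_lat, user_lon, sites):
    """Return the name of the site in `sites` ({name: (lat, lon)}) closest to the user."""
    return min(sites, key=lambda name: haversine_km(user_lat, user_lon, *sites[name]))

# Hypothetical edge sites (coordinates are illustrative only).
sites = {
    "site-north": (52.52, 13.40),
    "site-south": (48.14, 11.58),
    "site-west": (50.94, 6.96),
}
print(nearest_site(48.40, 11.70, sites))  # site-south
```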

Performance Benefits of Edge-Centric Topologies

The redistribution of computing resources across edge locations yields several significant performance advantages:

1. Latency Reduction

The most obvious benefit is a dramatic reduction in round-trip time for data processing:

- Physical Distance Minimization: Processing closer to data sources shortens propagation delay

- Network Hop Reduction: Fewer intermediary devices in the communication path

- Local Decision Making: Time-critical functions execute on site instead of waiting for a response from a distant data center

For applications like autonomous vehicles, industrial control systems, and augmented reality, reducing latency from hundreds of milliseconds to single-digit milliseconds can be the difference between viability and impracticality.
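A back-of-the-envelope calculation shows why distance matters. Light in optical fiber travels at roughly two-thirds of its vacuum speed, about 200,000 km/s, so every 100 km of fiber adds about 1 ms of round-trip propagation delay before any queuing or processing time. The sketch assumes that speed and a straight fiber route; real routes are usually longer, and the distances are hypothetical.

```python
FIBER_SPEED_KM_PER_MS = 200.0  # ~200,000 km/s, about 2/3 of c (approximation)

def propagation_rtt_ms(distance_km: float) -> float:
    """Round-trip propagation delay over fiber, ignoring queuing, serialization, and processing."""
    return 2 * distance_km / FIBER_SPEED_KM_PER_MS

# Compare a hypothetical distant cloud region with closer edge tiers.
for label, km in [("cloud region", 1500), ("regional edge", 200), ("near edge", 20)]:
    print(f"{label:>13}: {propagation_rtt_ms(km):.1f} ms")
```

The cloud case works out to 15 ms of propagation alone versus 0.2 ms for a site 20 km away. Propagation is a floor that server tuning cannot remove; hop count and processing location, the other two bullets, account for the rest.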

2. Bandwidth Optimization

Edge processing significantly reduces backhaul bandwidth requirements:

- Local Data Filtering: Processing raw data locally and transmitting only relevant information

- Hierarchical Aggregation: Consolidating and summarizing data at each network tier

- Distributed Caching: Storing frequently accessed content closer to users

This optimization is particularly valuable for video analytics, IoT sensor networks, and content delivery applications where raw data volumes would otherwise overwhelm network capacity.
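Local filtering and hierarchical aggregation can be as simple as replacing a window of raw readings with a compact summary before it leaves the site. This is an illustrative sketch; the window size and summary fields are assumptions, not a prescribed format.

```python
from statistics import mean

def summarize_window(readings):
    """Collapse a window of raw sensor readings into one summary record."""
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(mean(readings), 2),
    }

def aggregate_stream(readings, window_size):
    """Yield one summary per `window_size` readings; a trailing partial window is summarized too."""
    for start in range(0, len(readings), window_size):
        yield summarize_window(readings[start:start + window_size])

raw = [20.1, 20.3, 20.2, 25.7, 20.0, 20.4, 20.2, 20.1, 20.3, 20.2]
summaries = list(aggregate_stream(raw, window_size=5))
print(len(raw), "readings ->", len(summaries), "summaries")  # 10 readings -> 2 summaries
print(summaries[0])
```

Forwarding the max alongside the mean keeps the 25.7 spike visible upstream even though the raw samples stay local.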

3. Resilience and Autonomy

Edge-centric topologies enhance system resilience:

- Reduced Dependency on WAN Links: Critical functions can continue despite core connectivity issues

- Graceful Degradation: Systems can maintain essential functionality during partial outages

- Localized Fault Domains: Problems affect smaller portions of the overall system
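Graceful degradation during WAN outages is often implemented as store-and-forward: the edge node keeps working locally and queues upstream messages until the link returns. A minimal sketch follows; the `send` callable and the buffer size are assumptions, and a production version would persist the queue to disk.

```python
from collections import deque

class StoreAndForward:
    """Queue upstream messages while the WAN is down and flush them in order when it recovers."""

    def __init__(self, send, max_buffered=1000):
        self.send = send  # hypothetical callable: returns True on successful delivery
        self.buffer = deque(maxlen=max_buffered)  # when full, the oldest message is dropped

    def publish(self, message):
        self.buffer.append(message)
        self.flush()

    def flush(self):
        """Deliver buffered messages in order; stop at the first failed send."""
        while self.buffer:
            if not self.send(self.buffer[0]):
                return False
            self.buffer.popleft()
        return True

# Simulate an outage: the link is down for the first two messages.
link_up = False
delivered = []

def send(msg):
    if link_up:
        delivered.append(msg)
    return link_up

node = StoreAndForward(send, max_buffered=100)
node.publish("reading-1")
node.publish("reading-2")
link_up = True
node.publish("reading-3")
print(delivered)  # ['reading-1', 'reading-2', 'reading-3']
```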

| Metric | Traditional Cloud | Edge Computing | Improvement |
|---|---|---|---|
| Average Latency | 80-150 ms | 5-25 ms | 80-95% |
| Backhaul Bandwidth Usage | 100% (baseline) | 15-40% | 60-85% |
| Offline Functionality | Minimal | Moderate-High | Significant |
| WAN Dependency | High | Low-Moderate | Substantial |

Security Implications of Edge Computing

The distributed nature of edge computing creates both security challenges and opportunities:

1. Expanded Attack Surface

Edge computing inherently increases the number of potential entry points:

- Physical Security Challenges: Edge nodes often reside in less controlled environments

- Heterogeneous Device Ecosystem: Diverse hardware and software platforms with varying security capabilities

- Multiple Trust Domains: Processing may span different ownership and administrative boundaries

2. Security Architecture Adaptations

Addressing edge security challenges requires fundamental architectural changes:

- Zero Trust Architecture: Assuming no inherent trust regardless of network location

- Distributed Identity: Managing authentication and authorization across distributed nodes

- Micro-Segmentation: Creating fine-grained security zones with strict policy enforcement

- Hardware Root of Trust: Leveraging secure hardware elements to establish device identity and integrity
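Zero trust and micro-segmentation share one rule: deny by default and allow only explicitly listed, authenticated flows, regardless of where a request originates. The sketch evaluates flows against a hypothetical allowlist; the segment names, ports, and rule format are illustrative assumptions, not any product's policy language.

```python
from typing import NamedTuple

class Flow(NamedTuple):
    src_segment: str
    dst_segment: str
    port: int
    authenticated: bool  # e.g. the peer presented a valid client certificate

# Hypothetical allowlist of (source segment, destination segment, port).
ALLOWED_FLOWS = {
    ("sensors", "edge-analytics", 8883),    # MQTT over TLS
    ("edge-analytics", "regional-core", 443),
}

def is_permitted(flow: Flow) -> bool:
    """Default deny: a flow passes only if it is authenticated AND explicitly allowed."""
    if not flow.authenticated:
        return False  # network location alone never grants trust
    return (flow.src_segment, flow.dst_segment, flow.port) in ALLOWED_FLOWS

print(is_permitted(Flow("sensors", "edge-analytics", 8883, True)))   # True
print(is_permitted(Flow("sensors", "regional-core", 443, True)))     # False: not listed
print(is_permitted(Flow("sensors", "edge-analytics", 8883, False)))  # False: unauthenticated
```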

3. Data Protection Strategies

Protecting data in a distributed environment requires careful consideration:

- Contextual Encryption: Applying different protection levels based on data sensitivity and location

- Secure Enclaves: Using trusted execution environments for processing sensitive data at the edge

- Minimized Data Footprint: Retaining only necessary information at each tier
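Minimizing the data footprint can be enforced mechanically with a per-tier field allowlist applied before a record is forwarded. The tier names and fields below are hypothetical.

```python
# Hypothetical allowlists: which fields each tier is permitted to receive.
TIER_FIELDS = {
    "local_edge": {"device_id", "timestamp", "temperature", "vibration", "operator_id"},
    "regional_edge": {"device_id", "timestamp", "temperature", "vibration"},
    "cloud": {"device_id", "timestamp", "temperature"},
}

def minimize_for_tier(record: dict, tier: str) -> dict:
    """Return a copy of `record` holding only the fields the target tier may store.

    An unknown tier raises KeyError, so the filter fails closed rather than forwarding everything.
    """
    allowed = TIER_FIELDS[tier]
    return {key: value for key, value in record.items() if key in allowed}

record = {"device_id": "press-07", "timestamp": 1700000000, "temperature": 71.5,
          "vibration": 0.42, "operator_id": "op-193"}
print(minimize_for_tier(record, "cloud"))
# {'device_id': 'press-07', 'timestamp': 1700000000, 'temperature': 71.5}
```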

```python
# Python pseudocode for edge security zoning.
# SecurityZone, CommunicationPolicy, the control classes, the ENCRYPTION_LEVEL,
# TAMPER_LEVEL and SENSITIVITY enums, both thresholds, and cluster_by_location()
# stand in for whatever policy framework a deployment uses; topology_map is
# assumed to provide get_nodes() and add_policy().

def configure_edge_security_zones(topology_map):
    """Create security zones for an edge computing deployment."""
    zones = {}

    # Identify all edge nodes
    edge_nodes = topology_map.get_nodes(type="edge_compute")

    # Group nodes by geographic proximity
    geographic_clusters = cluster_by_location(edge_nodes)

    for cluster_id, nodes in geographic_clusters.items():
        # Create a security zone for this cluster
        zone = SecurityZone(f"edge-zone-{cluster_id}")

        # A zone is only as physically secure as its weakest node, and must be
        # protected for the most sensitive data any of its nodes handles.
        physical_security_level = min(node.physical_security_rating for node in nodes)
        zone.data_sensitivity = max(node.data_sensitivity_level for node in nodes)

        # Poorly secured sites get stronger on-device protections
        if physical_security_level < SECURITY_THRESHOLD:
            zone.add_control(EncryptionAtRest(ENCRYPTION_LEVEL.HIGH))
            zone.add_control(TamperResistance(TAMPER_LEVEL.ENHANCED))
            zone.add_control(AnomalyDetection(SENSITIVITY.HIGH))

        # One policy per zone pair: link this zone to every zone created before it
        for other_zone in zones.values():
            policy = CommunicationPolicy(zone, other_zone)
            policy.require_mutual_tls = True
            policy.require_request_verification = True

            # Additional controls for sensitive data flows
            if max(zone.data_sensitivity, other_zone.data_sensitivity) > SENSITIVITY_THRESHOLD:
                policy.require_payload_encryption = True
                policy.allow_protocols = ["TLSv1.3"]

            topology_map.add_policy(policy)

        zones[cluster_id] = zone

    return zones
```

Implementation Strategies for Edge Computing

Successfully implementing edge-centric network topologies requires careful planning and execution:

1. Edge Node Placement Optimization

Strategic placement of edge computing resources maximizes performance benefits:

- Traffic Pattern Analysis: Positioning resources based on user concentration and data flows

- Latency Mapping: Identifying geographic locations that optimize round-trip times

- Iterative Deployment: Starting with high-impact locations and expanding based on measured results
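Node placement is a variant of the classic facility-location problem. A common heuristic, shown here as a sketch rather than a recommended planner, is greedy maximum coverage: repeatedly pick the candidate site that brings the most additional demand within a latency budget. The sites, demand weights, and latency estimates are hypothetical inputs.

```python
def greedy_placement(candidates, demand, latency_ms, budget_ms, k):
    """Pick up to k sites greedily, maximizing demand served within budget_ms.

    candidates: list of site names (ties go to the earlier candidate)
    demand:     {area: demand weight, e.g. users or requests/s}
    latency_ms: {(site, area): estimated latency in ms}; missing pairs are unreachable
    """
    chosen, covered = [], set()
    for _ in range(k):
        best_site, best_gain = None, 0.0
        for site in candidates:
            if site in chosen:
                continue
            # Demand this site would newly bring inside the latency budget.
            gain = sum(
                weight for area, weight in demand.items()
                if area not in covered
                and latency_ms.get((site, area), float("inf")) <= budget_ms
            )
            if gain > best_gain:
                best_site, best_gain = site, gain
        if best_site is None:
            break  # no remaining site adds coverage
        chosen.append(best_site)
        covered |= {area for area in demand
                    if latency_ms.get((best_site, area), float("inf")) <= budget_ms}
    return chosen, covered

candidates = ["s1", "s2", "s3"]
demand = {"downtown": 500, "harbor": 200, "suburb": 120}
latency_ms = {
    ("s1", "downtown"): 4, ("s1", "harbor"): 9,
    ("s2", "harbor"): 3, ("s2", "suburb"): 6,
    ("s3", "suburb"): 2, ("s3", "downtown"): 15,
}
chosen, covered = greedy_placement(candidates, demand, latency_ms, budget_ms=10, k=2)
print(chosen)  # ['s1', 's2']
```

Greedy selection is not guaranteed optimal, but for maximum-coverage problems it achieves at least a (1 - 1/e) fraction of the optimal coverage, which makes it a reasonable first pass before measurement-driven expansion.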

2. Workload Classification and Distribution

Not all workloads benefit equally from edge deployment:

- Latency Sensitivity Analysis: Identifying applications that benefit most from reduced latency

- Data Gravity Assessment: Evaluating where data is produced and consumed

- Processing Profiles: Analyzing compute, memory, and storage requirements for different application components

This analysis enables intelligent decomposition of applications across the computing continuum, with components placed at the most appropriate tier.
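One way to operationalize this classification is to place each component at the most centralized tier that still meets its latency budget and has spare capacity, assuming centralized tiers are cheaper to run. The tier round-trip times and capacities below are hypothetical placeholders, not measured values.

```python
from dataclasses import dataclass

@dataclass
class Tier:
    name: str
    rtt_ms: float     # assumed typical round-trip time to the data source
    free_vcpus: int   # assumed spare compute capacity

@dataclass
class Component:
    name: str
    latency_budget_ms: float
    vcpus: int

# Ordered from most centralized to most distributed (hypothetical figures).
TIERS = [
    Tier("cloud", 100.0, 1000),
    Tier("regional_edge", 20.0, 64),
    Tier("near_edge", 8.0, 16),
    Tier("local_edge", 2.0, 4),
]

def place(components, tiers):
    """Assign each component to the most centralized tier meeting its budget and capacity."""
    placement = {}
    # Tightest budgets first, so they claim scarce edge capacity before relaxed workloads.
    for comp in sorted(components, key=lambda c: c.latency_budget_ms):
        for tier in tiers:
            if tier.rtt_ms <= comp.latency_budget_ms and tier.free_vcpus >= comp.vcpus:
                tier.free_vcpus -= comp.vcpus  # reserve capacity (mutates the Tier)
                placement[comp.name] = tier.name
                break
        else:
            placement[comp.name] = None  # no tier satisfies this component
    return placement

components = [
    Component("trend-report", 5000.0, 8),
    Component("defect-detector", 10.0, 4),
    Component("safety-interlock", 3.0, 2),
]
result = place(components, TIERS)
print(result)
# {'safety-interlock': 'local_edge', 'defect-detector': 'near_edge', 'trend-report': 'cloud'}
```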

3. Orchestration and Management

Managing distributed edge resources requires sophisticated orchestration:

- Hierarchical Management: Coordinating resources across tiers with appropriate delegation

- Centralized Policy, Distributed Execution: Defining rules centrally but enforcing them locally

- Automated Deployment: Using CI/CD pipelines adapted for edge environments

- Configuration Management: Maintaining consistency across heterogeneous edge nodes
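"Centralized policy, distributed execution" is commonly implemented as a reconciliation loop, the pattern Kubernetes controllers use: the center publishes desired state, and each node computes and applies the difference from what it is actually running. The sketch shows only the diff step; workload names and versions are hypothetical.

```python
def reconcile(desired: dict, actual: dict) -> list:
    """Return the actions a node must take to move from `actual` to `desired`.

    Both arguments map workload name -> version string.
    """
    actions = []
    for name, version in sorted(desired.items()):
        if name not in actual:
            actions.append(("deploy", name, version))
        elif actual[name] != version:
            actions.append(("upgrade", name, version))
    # Anything running locally but absent from the central policy is removed.
    for name in sorted(actual.keys() - desired.keys()):
        actions.append(("remove", name, actual[name]))
    return actions

desired = {"anomaly-detector": "1.4.0", "video-filter": "2.1.0"}  # from central policy
actual = {"anomaly-detector": "1.3.2", "legacy-agent": "0.9.0"}    # running on the node
for action in reconcile(desired, actual):
    print(action)
# ('upgrade', 'anomaly-detector', '1.4.0')
# ('deploy', 'video-filter', '2.1.0')
# ('remove', 'legacy-agent', '0.9.0')
```

Once the actions are applied, calling `reconcile` again returns an empty list, so a node that was disconnected can safely rerun it when it reconnects.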

Real-World Edge Computing Implementations

Case Study 1: Industrial IoT Edge Network

A multinational manufacturer implemented an edge-centric architecture for its production facilities:

- Topology Design: Three-tier architecture with machine-level processing, production line aggregation, and facility-level analysis

- Implementation Approach: Standardized compute modules with containerized applications deployed via a centralized orchestration platform

- Network Architecture: Isolated operational technology networks with secure gateways to business systems

- Results: 98% reduction in critical control loop latency, 87% decrease in cloud data transfer, and real-time anomaly detection that prevented several potential production outages

Case Study 2: Telecommunications Edge Infrastructure

- Deployment Scenario: National telecommunications provider implementing Multi-access Edge Computing (MEC) at cell sites

- Architecture: Distributed compute nodes at cell towers connected to regional data centers, with workload mobility between tiers

- Application Focus: Video content delivery, augmented reality services, and IoT data processing

- Performance Impact: 68% reduction in content delivery latency, 42% decrease in backhaul traffic, and support for new low-latency services

Network Design Patterns for Edge Computing

Several design patterns have emerged to address the unique challenges of edge computing networks:

1. Hub-and-Spoke with Local Processing

This pattern maintains a hierarchical structure but adds processing capability at spoke locations:

- Local Data Processing: Edge nodes filter, aggregate, and analyze data before forwarding

- Centralized Control Plane: Management and orchestration remain hub-centric

- Selective Data Forwarding: Only relevant data or insights propagate upward

This approach balances the benefits of edge processing with the simplicity of centralized control.
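Selective forwarding is often done with a deadband (report-by-exception) filter: a spoke sends a value upstream only when it has moved more than a threshold since the last value it sent. The threshold below is an assumed example.

```python
class DeadbandFilter:
    """Forward a reading only if it differs from the last forwarded value by more than `threshold`."""

    def __init__(self, threshold: float):
        self.threshold = threshold
        self.last_sent = None

    def should_forward(self, value: float) -> bool:
        if self.last_sent is None or abs(value - self.last_sent) > self.threshold:
            self.last_sent = value
            return True
        return False

f = DeadbandFilter(threshold=0.5)
readings = [20.0, 20.2, 20.4, 21.0, 21.1, 19.9]
forwarded = [r for r in readings if f.should_forward(r)]
print(forwarded)  # [20.0, 21.0, 19.9]
```

Comparing against the last forwarded value rather than the previous sample prevents slow drift from going unreported.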

2. Distributed Mesh with Federated Control

A more advanced pattern that enables greater autonomy and peer communication:

- Peer-to-Peer Communication: Edge nodes communicate directly for coordinated actions

- Federated Decision Making: Control distributed across nodes with consensus mechanisms

- Local Autonomy: Nodes can function independently when disconnected from centralized systems

This pattern maximizes resilience and minimizes latency but introduces greater complexity.
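Consensus mechanisms of this kind usually rest on majority quorums: a decision commits only when more than half of all members agree, so two partitioned halves of a mesh cannot both commit conflicting decisions. The sketch shows that rule plus a local-autonomy fallback; the "local-safe-mode" behavior is an assumption. Full protocols such as Raft or Paxos also handle leader election, ordering, and retries, which this omits.

```python
def has_quorum(yes_votes: int, cluster_size: int) -> bool:
    """Strict majority of the whole cluster, not just of the nodes currently reachable."""
    return yes_votes > cluster_size // 2

def decide(proposal: str, votes: dict, cluster_size: int) -> str:
    """Commit with a majority; otherwise fall back to conservative local-only behavior."""
    yes = sum(1 for accepted in votes.values() if accepted)
    if has_quorum(yes, cluster_size):
        return f"commit:{proposal}"
    # Not enough agreement, or too many peers unreachable: stay autonomous but cautious.
    return "local-safe-mode"

# Five-node mesh: three reachable peers agree, so the proposal commits.
print(decide("reroute-traffic", {"n1": True, "n2": True, "n3": True}, cluster_size=5))
# Partitioned: only two of five reachable, so no quorum.
print(decide("reroute-traffic", {"n1": True, "n2": True}, cluster_size=5))
```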

3. Hierarchical Processing Tiers

This pattern creates a processing continuum with specialized functions at each tier:

- Real-Time Processing: Lowest tier handles immediate, time-sensitive operations

- Tactical Analysis: Middle tiers perform intermediate aggregation and analysis

- Strategic Intelligence: Higher tiers focus on long-term patterns and cross-system integration

This approach optimizes different types of processing based on their temporal and spatial requirements.

Future Trends in Edge Network Topology

Several emerging trends will shape the future evolution of edge-centric network topologies:

1. AI-Driven Topology Optimization

Machine learning algorithms are increasingly being applied to optimize edge network topologies:

- Dynamic Resource Allocation: Automated adjustment of processing distribution based on changing demands

- Predictive Placement: Positioning resources based on anticipated usage patterns

- Self-Healing Networks: Autonomous reconfiguration in response to failures or performance degradation
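Production systems may use trained models here, but the core loop of predictive placement can be shown with a much simpler stand-in: forecast demand with an exponentially weighted moving average (EWMA) and pre-provision capacity with some headroom. EWMA is a basic statistical forecaster, not machine learning, and the smoothing factor, headroom, and per-instance capacity are assumed values.

```python
import math

def ewma_forecast(history, alpha=0.5):
    """One-step-ahead forecast: exponentially weighted average of past demand (non-empty list)."""
    forecast = history[0]
    for observed in history[1:]:
        forecast = alpha * observed + (1 - alpha) * forecast
    return forecast

def instances_needed(forecast_rps, per_instance_rps=100.0, headroom=0.2):
    """Instances to pre-provision at a site, with fractional headroom for forecast error."""
    return math.ceil(forecast_rps * (1 + headroom) / per_instance_rps)

requests_per_second = [200, 240, 300, 380]  # hypothetical recent demand at one edge site
forecast = ewma_forecast(requests_per_second)
print(forecast, instances_needed(forecast))  # 320.0 4
```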

2. Edge-Native Application Architectures

Application design is evolving to fully leverage edge capabilities:

- Decomposed Microservices: Applications built as distributable components that can be placed optimally across the edge-to-cloud continuum

- Location-Aware Logic: Applications that adapt behavior based on where components are deployed

- Latency-Adaptive Algorithms: Processing that adjusts precision and methods based on available response time
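A latency-adaptive component can choose, per request, the most accurate processing variant that fits the remaining time budget. The variant names, expected latencies, and accuracy scores below are hypothetical.

```python
from typing import NamedTuple, Optional

class Variant(NamedTuple):
    name: str
    expected_ms: float  # assumed typical execution time
    accuracy: float     # assumed relative quality score

VARIANTS = [
    Variant("full-model", 45.0, 0.95),
    Variant("quantized-model", 12.0, 0.91),
    Variant("heuristic", 1.5, 0.70),
]

def choose_variant(budget_ms: float, variants=VARIANTS) -> Optional[Variant]:
    """Pick the most accurate variant whose expected time fits the budget, else None."""
    fitting = [v for v in variants if v.expected_ms <= budget_ms]
    return max(fitting, key=lambda v: v.accuracy, default=None)

for budget in (100.0, 20.0, 2.0, 1.0):
    choice = choose_variant(budget)
    print(budget, choice.name if choice else "none: degrade or reject")
```

Returning `None` instead of silently running a variant that cannot finish in time leaves the caller free to degrade gracefully, for example by reusing the last result.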

3. Converged Infrastructure at the Edge

The traditional separation between network, compute, and storage is blurring at the edge:

- Programmable Network Devices: Switches and routers that can host application components

- In-Network Computing: Processing data as it flows through the network rather than at endpoints

- Smart NICs and DPUs: Specialized hardware that offloads networking, security, and storage functions

Conclusion

Edge computing represents a fundamental shift in network topology design—moving from centralized architectures to distributed processing models that place intelligence throughout the network. This transformation enables new classes of applications with stringent latency requirements while optimizing bandwidth usage and enhancing system resilience.

The impact on network topology is profound, requiring new approaches to connectivity, security, and management. Traditional hierarchical designs are giving way to more flexible, mesh-oriented structures with processing distributed across multiple tiers. This evolution creates both challenges and opportunities for network architects and system designers.

As edge computing continues to mature, we can expect further innovation in topology design, with increasing automation, intelligence, and adaptability. The most successful implementations will balance the benefits of distributed processing with the need for coherent management and security, creating systems that can seamlessly span from edge to cloud.

Organizations embarking on edge computing initiatives should focus on strategic node placement, careful workload distribution, and robust orchestration capabilities. By thoughtfully redesigning network topologies to embrace edge principles, they can unlock significant performance improvements and enable entirely new capabilities that were previously impractical with centralized architectures.