Microservice Architecture for Distributed Data Processing

The Evolution of Distributed Data Processing

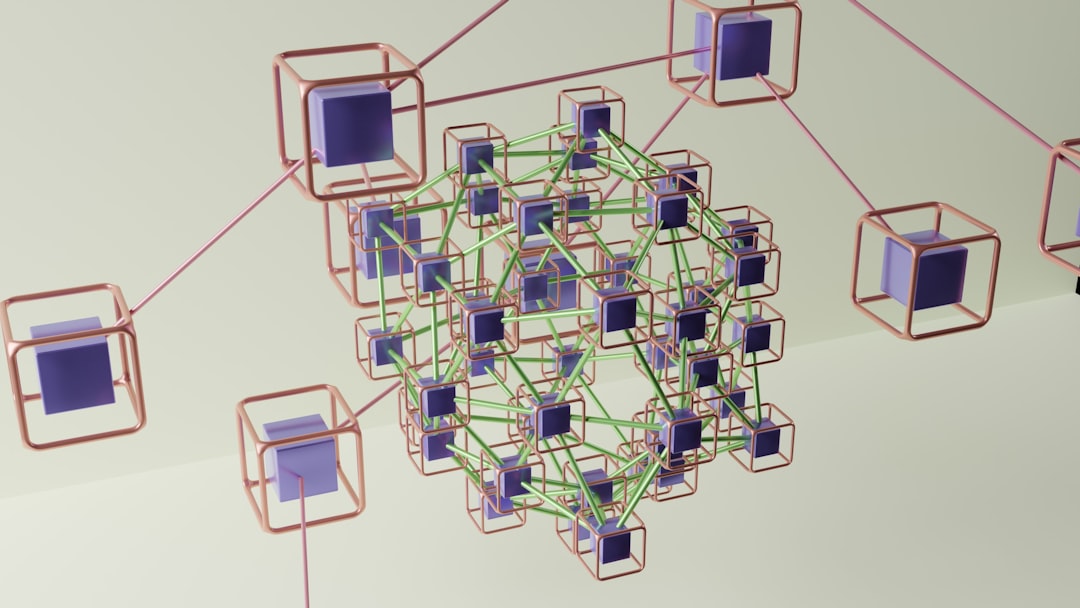

The landscape of data processing has undergone a profound transformation in recent years. Where monolithic applications once dominated, we now see distributed architectures composed of specialized, independent services working in concert to process vast amounts of data across global networks.

This evolution has been driven by several factors: the exponential growth in data volume, increasing complexity of processing requirements, and the need for greater resilience and scalability. Microservice architecture has emerged as a dominant paradigm for addressing these challenges, offering a flexible approach to building systems that can efficiently handle distributed data processing workloads.

Core Principles of Microservice Architecture

At its foundation, microservice architecture embodies several key principles that make it particularly well-suited for distributed data processing:

1. Service Autonomy

Each microservice operates as an independent unit with its own data store, business logic, and well-defined API. This autonomy enables:

- Independent deployment and scaling based on specific processing demands

- Isolation of failures to prevent cascading system breakdowns

- Technology stack flexibility tailored to specific data processing needs

2. Domain-Driven Design

Organizing services around business capabilities rather than technical functions creates a more natural alignment with data processing workflows:

- Services own complete vertical slices of functionality

- Domain boundaries prevent tight coupling between disparate systems

- Business logic and associated data remain cohesive

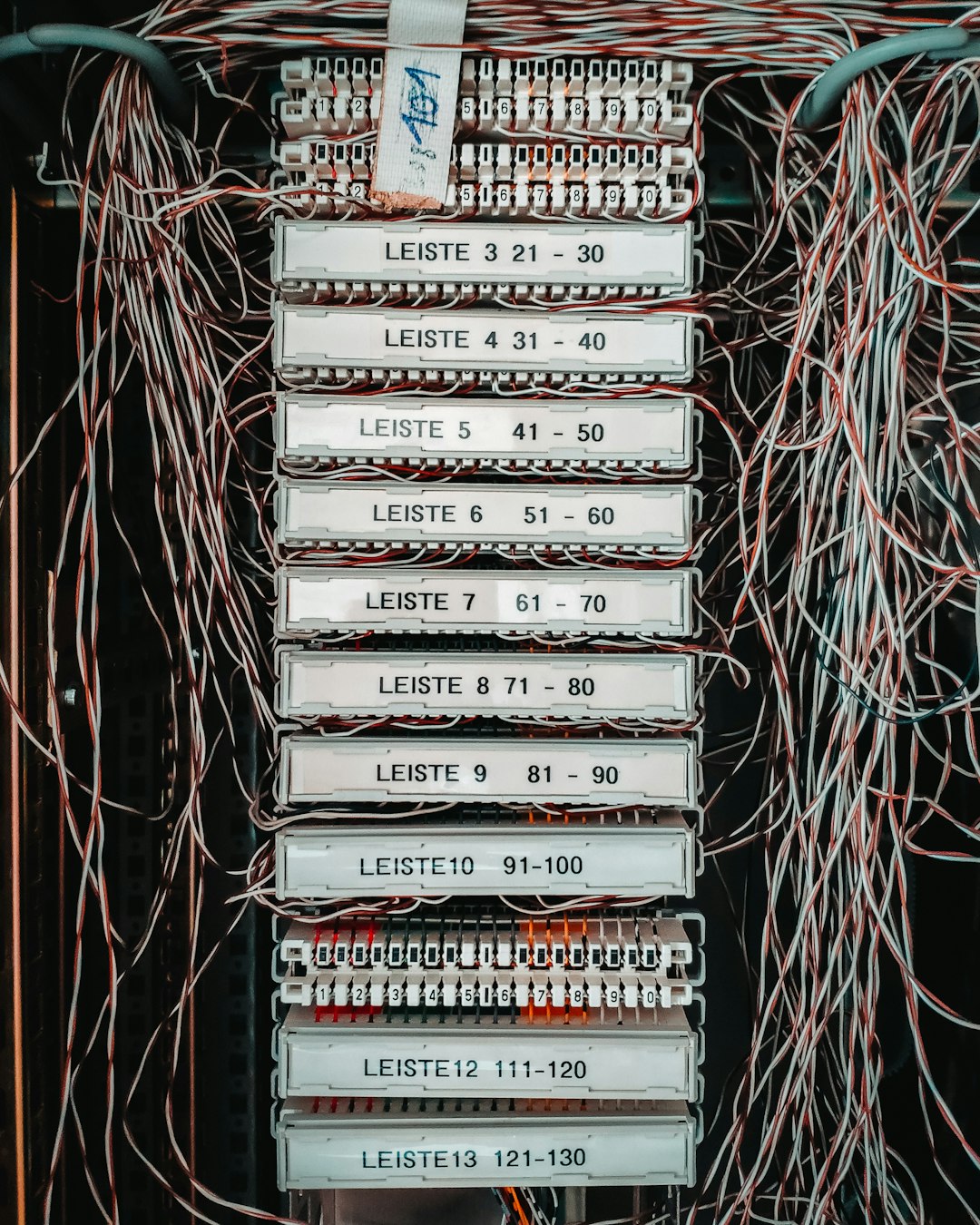

3. Decentralized Data Management

Unlike monolithic systems with centralized databases, microservices employ a distributed data approach:

- Each service maintains its own data store optimized for its specific needs

- Polyglot persistence allows choosing appropriate database technologies for different data types

- Consistency across services is typically eventual, maintained through asynchronous messaging rather than shared database transactions

Case Study: E-commerce Data Processing Transformation

A major e-commerce platform decomposed its monolithic order processing system into specialized microservices handling inventory, payment processing, shipping logistics, and customer notifications. By applying domain-driven design principles, the platform achieved 99.99% uptime during peak shopping seasons and reduced data processing latency by 76%, even as transaction volume grew by 300% over two years.

Architectural Patterns for Distributed Data Processing

Several proven patterns have emerged for implementing effective distributed data processing using microservices:

1. Event-Driven Processing

In event-driven architectures, services communicate through events representing significant state changes:

- Event Sourcing: Storing all state changes as an immutable sequence of events, providing a complete audit trail and enabling temporal queries

- CQRS (Command Query Responsibility Segregation): Separating read and write operations to optimize for different data access patterns

- Message Brokers: Utilizing systems like Apache Kafka, RabbitMQ, or Amazon SNS/SQS to reliably deliver events between services

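This approach loosely couples services, since producers do not need to know which consumers exist. It also adds resilience: a durable broker retains events, so a consumer that fails can resume processing once it recovers.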
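A minimal in-memory sketch can make event sourcing and CQRS concrete. The account domain, the event names, and the list-backed `EventStore` are illustrative assumptions, not a prescribed design; a production system would persist events in a durable log. The write side only appends events, current or historical state is rebuilt by replaying them (a version cut-off serves as the temporal query), and a separate read model is maintained from the same events.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, List, Optional


@dataclass(frozen=True)
class AccountEvent:
    """Immutable record of one state change (hypothetical schema)."""
    account_id: str
    kind: str      # "deposited" or "withdrawn"
    amount: int
    version: int   # position in this account's event stream


class EventStore:
    """Append-only log: the write side never updates or deletes events."""

    def __init__(self):
        self._events: List[AccountEvent] = []

    def append(self, event: AccountEvent) -> None:
        self._events.append(event)

    def stream(self, account_id: str, up_to_version: Optional[int] = None):
        for e in self._events:
            if e.account_id == account_id and (
                up_to_version is None or e.version <= up_to_version
            ):
                yield e


def signed(e: AccountEvent) -> int:
    return e.amount if e.kind == "deposited" else -e.amount


def replay_balance(events: Iterable[AccountEvent]) -> int:
    """Rebuild state by folding over events; a version cut-off gives a temporal query."""
    return sum(signed(e) for e in events)


@dataclass
class BalanceReadModel:
    """CQRS read side: a query-optimized view maintained from the event stream."""
    balances: Dict[str, int] = field(default_factory=dict)

    def apply(self, e: AccountEvent) -> None:
        self.balances[e.account_id] = self.balances.get(e.account_id, 0) + signed(e)


store, view = EventStore(), BalanceReadModel()
history = [("deposited", 100), ("withdrawn", 30), ("deposited", 50)]
for version, (kind, amount) in enumerate(history, start=1):
    event = AccountEvent("acc-1", kind, amount, version)
    store.append(event)  # command side: append only
    view.apply(event)    # read side: update the projection

print(replay_balance(store.stream("acc-1")))                   # 120
print(replay_balance(store.stream("acc-1", up_to_version=2)))  # 70
print(view.balances["acc-1"])                                   # 120
```

In a distributed deployment the read model would be updated by a consumer of the event stream, so it can lag slightly behind the log; that lag is the eventual-consistency trade-off CQRS accepts in exchange for independently optimized reads and writes.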

2. Stream Processing Pipelines

For continuous data processing requirements, stream processing architectures excel:

- Stream Processors: Specialized services that transform, enrich, or analyze data streams

- Windowing Operations: Processing data within defined time or count boundaries

- Stateful Processing: Maintaining context across multiple events for complex analytics

Technologies like Apache Flink, Kafka Streams, and Spark Structured Streaming provide robust frameworks for implementing these patterns.
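Windowing and stateful processing can be illustrated with a tumbling (fixed, non-overlapping) window that counts events per key. This Python sketch assumes events arrive in timestamp order; engines such as Flink handle late and out-of-order events with watermarks, which are omitted here. The per-window `counts` dictionary is the operator state carried from one event to the next.

```python
def tumbling_windows(events, size_s):
    """Yield (window_start, counts_per_key) for fixed, non-overlapping windows.

    Assumes `events` is an in-order stream of (timestamp_seconds, key) pairs.
    A window is emitted as soon as the first event of the next window arrives.
    """
    window_start, counts = None, {}  # operator state carried across events
    for ts, key in events:
        start = ts - ts % size_s  # align the timestamp to its window boundary
        if window_start is not None and start != window_start:
            yield window_start, counts  # previous window is complete
            counts = {}
        window_start = start
        counts[key] = counts.get(key, 0) + 1
    if window_start is not None:
        yield window_start, counts  # flush the final window at end of stream


clicks = [(0, "home"), (12, "cart"), (59, "home"), (60, "home"), (75, "cart")]
windows = list(tumbling_windows(clicks, size_s=60))
print(windows)  # [(0, {'home': 2, 'cart': 1}), (60, {'home': 1, 'cart': 1})]
```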

3. API Gateway Pattern

API gateways provide a unified entry point for distributed data processing operations:

- Request Routing: Directing client requests to appropriate microservices

- Composition: Aggregating responses from multiple services to simplify client interactions

- Protocol Translation: Converting between different communication protocols as needed
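Request routing and composition can be sketched with `asyncio`. The route table, service names, and the two `fetch_*` coroutines are hypothetical placeholders for real HTTP or gRPC calls; the point is that the gateway fans out to services concurrently and merges their responses into one payload for the client, with a timeout so a single slow service cannot stall the whole request indefinitely.

```python
import asyncio

# Hypothetical route table: path prefix -> backing service.
ROUTES = {"/orders": "order-service", "/shipments": "shipping-service"}


def route(path):
    """Request routing: pick the service whose path prefix matches."""
    for prefix, service in ROUTES.items():
        if path == prefix or path.startswith(prefix + "/"):
            return service
    raise LookupError(f"no route for {path}")


# Hypothetical downstream calls; real ones would use an HTTP or gRPC client.
async def fetch_order(order_id):
    await asyncio.sleep(0.01)
    return {"order_id": order_id, "status": "shipped"}


async def fetch_shipment(order_id):
    await asyncio.sleep(0.01)
    return {"carrier": "ACME", "eta_days": 2}


async def order_summary(order_id, timeout_s=1.0):
    """Composition: query both services concurrently and merge the responses."""
    order, shipment = await asyncio.wait_for(
        asyncio.gather(fetch_order(order_id), fetch_shipment(order_id)),
        timeout=timeout_s,
    )
    return {**order, "shipment": shipment}


print(route("/orders/o-42"))  # order-service
summary = asyncio.run(order_summary("o-42"))
print(summary)
# {'order_id': 'o-42', 'status': 'shipped', 'shipment': {'carrier': 'ACME', 'eta_days': 2}}
```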

4. Saga Pattern for Distributed Transactions

The Saga pattern addresses the challenge of maintaining data consistency across services:

- Choreography: Services publish events that trigger subsequent processing steps

- Orchestration: A central coordinator manages the sequence of operations across services

- Compensating Transactions: Defined rollback operations for each step to maintain eventual consistency
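Orchestration with compensating transactions can be sketched as a coordinator that runs steps in order and, if one fails, runs the compensations of the already completed steps in reverse. The step names and actions below are hypothetical; a real orchestrator would also persist saga progress so it can resume after its own crash, which this in-memory version does not.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SagaStep:
    name: str
    action: Callable[[dict], None]      # forward operation in one service
    compensate: Callable[[dict], None]  # semantic undo for that operation


def run_saga(steps, ctx):
    """Central orchestrator: run steps in order; on failure, compensate in reverse."""
    completed = []
    for step in steps:
        try:
            step.action(ctx)
        except Exception as exc:
            for done in reversed(completed):
                done.compensate(ctx)  # compensating transaction
            return f"aborted at {step.name}: {exc}"
        completed.append(step)
    return "completed"


# Usage with hypothetical steps; the inventory step fails.
journal = []

def reserve_inventory(ctx):
    raise RuntimeError("out of stock")

steps = [
    SagaStep("payment", lambda c: journal.append("charge"), lambda c: journal.append("refund")),
    SagaStep("inventory", reserve_inventory, lambda c: journal.append("release")),
    SagaStep("shipping", lambda c: journal.append("ship"), lambda c: journal.append("cancel")),
]
outcome = run_saga(steps, {"order_id": "o-42"})
print(outcome)  # aborted at inventory: out of stock
print(journal)  # ['charge', 'refund']
```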

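```python
# Python sketch of a choreographed saga.
# EventBus is a minimal synchronous in-memory stand-in for a broker such as
# Kafka or RabbitMQ. payment_gateway, order_repository and inventory_manager
# are hypothetical collaborators that each service would supply.
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Event:
    name: str
    payload: dict


class EventBus:
    def __init__(self):
        self._handlers = defaultdict(list)

    def subscribe(self, name):
        def register(handler):
            self._handlers[name].append(handler)
            return handler
        return register

    def publish(self, event):
        for handler in self._handlers[event.name]:
            handler(event.payload)  # handlers receive the payload dict


event_bus = EventBus()
event_listener = event_bus.subscribe


# Payment Service
@event_listener("order_created")
def process_payment(order):
    result = payment_gateway.charge(
        amount=order["total_amount"],
        payment_method=order["payment_method"],
    )
    if result.success:
        event_bus.publish(Event("payment_processed", {
            "order_id": order["order_id"],
            "transaction_id": result.transaction_id,
        }))
    else:
        event_bus.publish(Event("payment_failed", {
            "order_id": order["order_id"],
            "reason": result.failure_reason,
        }))


# Payment Service: compensating transaction for a later failure
@event_listener("inventory_reservation_failed")
def refund_payment(failure):
    payment_gateway.refund(transaction_id=failure["transaction_id"])


# Inventory Service
@event_listener("payment_processed")
def reserve_inventory(payment):
    order = order_repository.get(payment["order_id"])
    result = inventory_manager.reserve_items(order.items)
    if result.success:
        event_bus.publish(Event("inventory_reserved", {
            "order_id": payment["order_id"],
            "inventory_reservation_id": result.reservation_id,
        }))
    else:
        # Trigger compensation: the Payment Service refunds the charge
        event_bus.publish(Event("inventory_reservation_failed", {
            "order_id": payment["order_id"],
            "transaction_id": payment["transaction_id"],
        }))
```

Integration Challenges and Solutions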

While microservices offer significant advantages for distributed processing, they also introduce several challenges that must be addressed:

1. Service Discovery and Configuration

In dynamic environments where services scale and migrate, locating service instances becomes complex:

- Service Registry: Use a registry such as Consul, etcd, or Eureka, with automatic registration and health checking

- Centralized Configuration: Externalize configuration to allow runtime adjustments without redeployment
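Registration with health checking can be sketched as an in-memory registry in which instances stay discoverable only while they keep sending heartbeats within a time-to-live. This mirrors the heartbeat/TTL idea used by registries like Consul and Eureka, but the class and method names here are hypothetical and are not either tool's API. The injectable `clock` lets the example run deterministically.

```python
import time


class ServiceRegistry:
    """In-memory registry: an instance is discoverable only while its last
    heartbeat is within `ttl_s` seconds (a simple TTL health check)."""

    def __init__(self, ttl_s=10.0, clock=time.monotonic):
        self.ttl_s, self.clock = ttl_s, clock
        self._last_seen = {}  # (service, address) -> time of last heartbeat

    def register(self, service, address):
        self._last_seen[(service, address)] = self.clock()

    heartbeat = register  # a heartbeat just refreshes the timestamp

    def deregister(self, service, address):
        self._last_seen.pop((service, address), None)

    def lookup(self, service):
        now = self.clock()
        return sorted(
            addr for (svc, addr), seen in self._last_seen.items()
            if svc == service and now - seen <= self.ttl_s
        )


now = [0.0]  # fake clock so the example is deterministic
registry = ServiceRegistry(ttl_s=10, clock=lambda: now[0])
registry.register("payments", "10.0.0.5:8080")
registry.register("payments", "10.0.0.6:8080")
now[0] = 8.0
registry.heartbeat("payments", "10.0.0.6:8080")  # only one instance stays healthy
now[0] = 15.0
print(registry.lookup("payments"))  # ['10.0.0.6:8080']
```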

2. Resilient Communication

Network failures and service unavailability are inevitable in distributed systems:

- Circuit Breakers: Prevent cascading failures by stopping calls to failing services

- Retry with Backoff: Automatically retry failed operations with exponentially increasing delays

- Bulkheads: Isolate resources to prevent one slow operation from consuming all available connections
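A circuit breaker and retry with exponential backoff might look like the following Python sketch. The thresholds, delays, and class names are assumptions for illustration. The breaker opens after a run of consecutive failures and rejects calls until `reset_s` has passed, then lets a trial call through (the half-open state); the retry helper doubles its delay on each attempt, caps it, and adds random jitter so many clients do not retry in lockstep. Bulkheads are not shown; one common approach is a bounded semaphore or dedicated connection pool per downstream dependency.

```python
import random
import time


class CircuitOpenError(Exception):
    """Raised when the breaker is open and the call is rejected immediately."""


class CircuitBreaker:
    """Opens after `threshold` consecutive failures; after `reset_s` seconds
    a trial call is allowed through (half-open)."""

    def __init__(self, threshold=3, reset_s=30.0, clock=time.monotonic):
        self.threshold, self.reset_s, self.clock = threshold, reset_s, clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, fn, *args, **kwargs):
        if self.opened_at is not None and self.clock() - self.opened_at < self.reset_s:
            raise CircuitOpenError("circuit open; failing fast")
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.threshold:
                self.opened_at = self.clock()  # open, or re-open after a failed trial
            raise
        self.failures, self.opened_at = 0, None  # success closes the circuit
        return result


def retry_with_backoff(fn, attempts=4, base_s=0.1, max_s=2.0, sleep=time.sleep):
    """Call fn, retrying failures with capped exponential backoff and full jitter."""
    for attempt in range(attempts):
        try:
            return fn()
        except CircuitOpenError:
            raise  # retrying against an open circuit would defeat its purpose
        except Exception:
            if attempt == attempts - 1:
                raise
            delay = min(max_s, base_s * 2 ** attempt)
            sleep(random.uniform(0, delay))


# Usage: a dependency that fails twice, then recovers.
calls = {"n": 0}

def flaky_inventory_lookup():
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("transient network error")
    return "ok"

breaker = CircuitBreaker(threshold=5)
result = retry_with_backoff(lambda: breaker.call(flaky_inventory_lookup),
                            sleep=lambda s: None)  # skip real sleeping in the demo
print(result, calls["n"])  # ok 3
```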

3. Distributed Monitoring and Tracing

Tracking operations across multiple services requires specialized observability tools:

- Distributed Tracing: Systems like Jaeger or Zipkin that track request flows across services

- Centralized Logging: Aggregating logs with context-preserving correlation IDs

- Metrics Aggregation: Collecting performance data to identify bottlenecks
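Correlation IDs for centralized logging can be propagated within a Python service using `contextvars` and a `logging.Filter`. The header name `X-Correlation-ID` is a common convention assumed here; tracing systems usually propagate their own context headers instead, for example B3 headers for Zipkin or the W3C Trace Context `traceparent` header. The handler reuses an incoming ID, or creates one, so every log line and downstream call for that request carries the same value.

```python
import contextvars
import logging
import uuid

# Holds the correlation ID of the request currently being handled.
correlation_id = contextvars.ContextVar("correlation_id", default="-")


class CorrelationIdFilter(logging.Filter):
    """Attach the current correlation ID to every log record."""

    def filter(self, record):
        record.correlation_id = correlation_id.get()
        return True


handler = logging.StreamHandler()
handler.setFormatter(logging.Formatter("%(correlation_id)s %(name)s %(message)s"))
handler.addFilter(CorrelationIdFilter())
log = logging.getLogger("orders")
log.addHandler(handler)
log.setLevel(logging.INFO)


def handle_request(headers):
    # Reuse the caller's ID so logs can be joined across services; else mint one.
    cid = headers.get("X-Correlation-ID") or str(uuid.uuid4())
    token = correlation_id.set(cid)
    try:
        log.info("processing order")  # logged as "req-123 orders processing order"
        # Headers to forward on outgoing calls to downstream services.
        return {"X-Correlation-ID": cid}
    finally:
        correlation_id.reset(token)


outgoing = handle_request({"X-Correlation-ID": "req-123"})
print(outgoing)  # {'X-Correlation-ID': 'req-123'}
```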

4. Data Consistency

Maintaining consistency without distributed transactions is a fundamental challenge:

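- Eventual Consistency: Accepting that data may be temporarily inconsistent across services, converging once all updates have propagated

- Outbox Pattern: Using a single local transaction to update both service state and an outbox message table, from which a separate relay publishes the messages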

- CDC (Change Data Capture): Propagating data changes by monitoring database transaction logs
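The outbox pattern can be sketched with Python's built-in `sqlite3` standing in for a service's own database. The table layout and the `publish` callback are assumptions. The order row and its outbox message are written in one local transaction, so they commit or roll back together; a separate relay later forwards unpublished rows to the broker. If the relay crashes between publishing and marking a row, the message is sent again, so delivery is at-least-once and consumers should be idempotent.

```python
import json
import sqlite3

db = sqlite3.connect(":memory:")
db.executescript("""
CREATE TABLE orders (id TEXT PRIMARY KEY, total INTEGER);
CREATE TABLE outbox (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    topic TEXT, payload TEXT, published INTEGER DEFAULT 0
);
""")


def create_order(order_id, total):
    # One local transaction covers both writes: both commit or neither does.
    with db:
        db.execute("INSERT INTO orders VALUES (?, ?)", (order_id, total))
        db.execute(
            "INSERT INTO outbox (topic, payload) VALUES (?, ?)",
            ("order_created", json.dumps({"order_id": order_id, "total": total})),
        )


def relay_outbox(publish):
    """Separate relay: push unpublished rows to the broker, then mark them sent."""
    rows = db.execute(
        "SELECT id, topic, payload FROM outbox WHERE published = 0 ORDER BY id"
    ).fetchall()
    for row_id, topic, payload in rows:
        publish(topic, json.loads(payload))  # a crash here means a re-send later
        with db:
            db.execute("UPDATE outbox SET published = 1 WHERE id = ?", (row_id,))
    return len(rows)


sent = []
create_order("o-1", 250)
relay_outbox(lambda topic, msg: sent.append((topic, msg)))
print(sent)  # [('order_created', {'order_id': 'o-1', 'total': 250})]
```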

Performance Optimization Strategies

Optimizing performance in microservice-based data processing requires attention to several key areas:

1. Data Locality and Caching

- Positioning data close to processing services to minimize network latency

- Implementing distributed caching layers with technologies like Redis or Hazelcast

- Using read-replicas for geographically distributed access patterns
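A common way to use such a cache is the cache-aside pattern: read from the cache, and on a miss load from the owning service's store and populate the cache with an expiry. In this sketch a small in-process `TTLCache` stands in for Redis or Hazelcast; with the redis-py client the write would typically be `r.set(key, serialized_value, ex=ttl_seconds)`. The product loader and the TTL value are hypothetical.

```python
import time


class TTLCache:
    """Tiny in-process stand-in for a distributed cache such as Redis."""

    def __init__(self, ttl_s, clock=time.monotonic):
        self.ttl_s, self.clock, self._data = ttl_s, clock, {}

    def get(self, key):
        entry = self._data.get(key)
        if entry is None or self.clock() >= entry[1]:
            self._data.pop(key, None)  # missing or expired
            return None
        return entry[0]

    def set(self, key, value):
        self._data[key] = (value, self.clock() + self.ttl_s)


def get_product(product_id, cache, load_from_db):
    """Cache-aside: try the cache first, fall back to the owning store on a miss."""
    value = cache.get(product_id)
    if value is None:
        value = load_from_db(product_id)
        cache.set(product_id, value)
    return value


db_reads = []

def load(pid):  # hypothetical database lookup
    db_reads.append(pid)
    return {"id": pid, "price": 999}


now = [0.0]  # fake clock so the example is deterministic
cache = TTLCache(ttl_s=60, clock=lambda: now[0])
get_product("p1", cache, load)  # miss: loaded from the database
get_product("p1", cache, load)  # hit: served from the cache
now[0] = 61.0
get_product("p1", cache, load)  # entry expired: loaded again
print(db_reads)  # ['p1', 'p1']
```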

2. Asynchronous Processing

- Decoupling time-intensive operations from request handling

- Implementing backpressure mechanisms to handle traffic spikes

- Using non-blocking I/O to maximize resource utilization
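Backpressure can be illustrated with a bounded `asyncio.Queue`: when the consumer falls behind and the queue fills, `await queue.put(...)` suspends the producer instead of letting the buffer grow without limit. The queue size and the simulated work are arbitrary assumptions. In broker-based pipelines a similar effect comes from consumers pulling records at their own pace, as Kafka consumers do.

```python
import asyncio


async def producer(queue, n, peaks):
    for i in range(n):
        await queue.put(i)  # suspends while the queue is full: backpressure
        peaks.append(queue.qsize())
    await queue.put(None)  # sentinel: no more items


async def consumer(queue, results):
    while True:
        item = await queue.get()
        if item is None:
            break
        await asyncio.sleep(0.001)  # simulated slow, non-blocking I/O
        results.append(item * 2)


async def main(n=50, maxsize=10):
    queue = asyncio.Queue(maxsize=maxsize)  # the bound caps buffered work
    results, peaks = [], []
    await asyncio.gather(producer(queue, n, peaks), consumer(queue, results))
    return results, max(peaks)


results, peak = asyncio.run(main())
print(len(results), results[:3], peak <= 10)  # 50 [0, 2, 4] True
```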

3. Resource Efficiency

- Right-sizing service instances based on processing requirements

- Implementing auto-scaling based on workload patterns

- Optimizing container footprint to reduce overhead
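Auto-scaling is often driven by a target metric. The Kubernetes Horizontal Pod Autoscaler, for example, documents its core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The Python sketch below applies that ratio rule; the min/max bounds and the tolerance band that suppresses small adjustments are included as assumptions (the HPA's default tolerance is 0.1), and real autoscalers add further stabilization windows not modeled here.

```python
import math


def desired_replicas(current, current_metric, target_metric,
                     min_replicas=1, max_replicas=20, tolerance=0.1):
    """Replica count from the HPA-style ratio rule, clamped to [min, max]."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current  # close enough to target: avoid flapping
    desired = math.ceil(current * ratio)
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
print(desired_replicas(4, current_metric=63, target_metric=60))  # 4 (within tolerance)
print(desired_replicas(4, current_metric=10, target_metric=60))  # 1
```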

Implementation Case Studies

Case Study 1: Financial Data Processing Platform

A global financial services company implemented a microservice architecture for its transaction processing system:

- Architecture: 27 domain-specific microservices processing 30,000 transactions per second during peak periods

- Approach: Event-driven architecture with Kafka as the backbone, implementing CQRS with specialized read models

- Results: 99.999% availability, 80% reduction in time-to-market for new features, and the ability to scale individual processing components independently

Case Study 2: IoT Data Processing System

- Challenge: Processing data from millions of IoT devices generating terabytes of data daily

- Solution: Stream processing architecture with tiered storage (hot/warm/cold paths) and domain-specific processing microservices

- Technology Stack: Kafka for ingestion, Flink for stream processing, and specialized time-series databases

- Outcome: 99.95% data processing SLA with the ability to handle 2x forecasted device growth without architecture changes

Future Trends in Microservice Data Processing

Several emerging trends are shaping the future of microservice-based data processing:

1. Serverless Data Processing

Function-as-a-Service (FaaS) platforms are increasingly being used for event-driven data processing, offering automatic scaling and pay-per-execution pricing models. AWS Lambda, Azure Functions, and Google Cloud Functions are being integrated into microservice architectures to handle burst processing needs without maintaining idle capacity.
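As an illustration of event-driven serverless processing, the following is a sketch of a Python AWS Lambda handler consuming a batch of SQS messages. The `handler(event, context)` signature and the SQS record fields `messageId` and `body` follow Lambda's conventions; the `process` function and the message schema are hypothetical. Returning `batchItemFailures` asks Lambda to retry only the failed messages, which takes effect only when `ReportBatchItemFailures` is enabled on the event source mapping.

```python
import json


def process(reading):
    # Hypothetical business logic: reject readings without a device ID.
    if "device_id" not in reading:
        raise ValueError("missing device_id")


def handler(event, context):
    """Lambda entry point for an SQS-triggered function, with partial batch failures."""
    failures = []
    for record in event.get("Records", []):
        try:
            process(json.loads(record["body"]))
        except Exception:
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}


# Local invocation with a hand-built event (no AWS account needed):
sample = {"Records": [
    {"messageId": "m1", "body": json.dumps({"device_id": "d1", "temp": 21.5})},
    {"messageId": "m2", "body": json.dumps({"temp": 19.0})},
]}
result = handler(sample, None)
print(result)  # {'batchItemFailures': [{'itemIdentifier': 'm2'}]}
```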

2. Mesh Architectures

Service meshes like Istio, Linkerd, and Consul Connect provide sophisticated traffic management, security, and observability for microservice communications. By externalizing these concerns from application code, service meshes are enabling more reliable and secure distributed data processing.

3. Edge Processing

To reduce latency and bandwidth usage, data processing is increasingly being pushed to the network edge. Microservices deployed at edge locations can perform initial filtering, aggregation, and analysis before transmitting reduced data sets to central processing services.
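Edge filtering and aggregation can be sketched as a function that drops invalid readings and reduces the rest to per-device summaries, so only the summaries travel to central services. The valid range, alert threshold, and summary fields are hypothetical choices.

```python
from statistics import mean


def summarize_at_edge(readings, alert_above, valid_range=(-50.0, 150.0)):
    """Filter invalid readings, then reduce the rest to per-device summaries.

    readings: iterable of (device_id, value) pairs; value may be None.
    """
    low, high = valid_range
    by_device = {}
    for device_id, value in readings:
        if value is None or not (low <= value <= high):
            continue  # filtering: drop missing or out-of-range values
        by_device.setdefault(device_id, []).append(value)
    return {  # aggregation: one small record per device instead of raw samples
        device: {
            "count": len(values),
            "mean": round(mean(values), 2),
            "alerts": sum(v > alert_above for v in values),
        }
        for device, values in by_device.items()
    }


raw = [("d1", 20.0), ("d1", 22.0), ("d1", 999.0), ("d2", 80.0), ("d2", None)]
summary = summarize_at_edge(raw, alert_above=75.0)
print(summary)
# {'d1': {'count': 2, 'mean': 21.0, 'alerts': 0}, 'd2': {'count': 1, 'mean': 80.0, 'alerts': 1}}
```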

Best Practices for Implementation

Based on industry experience and case studies, several best practices have emerged for implementing microservice architectures for distributed data processing:

- Start with Domain Analysis: Carefully model domain boundaries before service decomposition

- Design for Failure: Assume services will fail and implement appropriate resilience patterns

- Embrace Asynchronous Communication: Use event-driven patterns to decouple services

- Implement Observability from Day One: Build comprehensive monitoring, logging, and tracing

- Automate Everything: From deployment to scaling to testing

- Use Bounded Contexts: Keep services focused on specific business capabilities

- Implement Circuit Breakers and Bulkheads: Protect against cascading failures

- Design Idempotent Operations: Ensure operations can be safely retried

- Minimize Synchronous Chains: Avoid deep synchronous dependencies between services

- Document API Contracts: Maintain clear interface specifications between services
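Idempotent operations are often implemented by having the consumer record an idempotency key for each message it has applied and skip any repeat, which makes redelivery from an at-least-once broker harmless. The sketch below keeps keys in a set and uses a hypothetical `credit` operation; a real service would store the keys durably, ideally in the same transaction as the state change.

```python
class IdempotentConsumer:
    """Applies each message at most once, keyed by its idempotency key."""

    def __init__(self, apply):
        self.apply = apply
        self.processed = set()  # durable storage in a real service

    def handle(self, message):
        key = message["idempotency_key"]
        if key in self.processed:
            return False  # duplicate delivery: safe no-op
        self.apply(message)
        self.processed.add(key)  # recorded only after apply succeeds
        return True


balances = {"acc-1": 0}

def credit(message):  # hypothetical non-idempotent operation
    balances[message["account"]] += message["amount"]


consumer = IdempotentConsumer(credit)
msg = {"idempotency_key": "pay-77", "account": "acc-1", "amount": 50}
first = consumer.handle(msg)
second = consumer.handle(msg)  # redelivered by an at-least-once broker
print(first, second, balances)  # True False {'acc-1': 50}
```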

Conclusion

Microservice architecture has proven to be a powerful paradigm for distributed data processing, offering flexibility, scalability, and resilience. By decomposing complex processing workflows into independent, focused services, organizations can build systems that adapt to changing requirements and handle massive data volumes with high reliability.

While implementing this architecture comes with significant challenges related to service integration, data consistency, and operational complexity, well-established patterns and practices have emerged to address these issues. Technologies like event streaming platforms, container orchestration, service meshes, and distributed tracing systems provide robust foundations for building effective microservice ecosystems.

As we look to the future, the continued evolution of serverless platforms, edge computing, and AI-driven operations will further enhance the capabilities of microservice architectures for distributed data processing, enabling even more sophisticated and efficient global digital networks.